Gigabyte G481-HA0 GPU Server Review

August 3, 2022

If you’re looking for a GPU-accelerated compute machine, then this Gigabyte G481-HA0 might be just the ticket. This system can support up to 10x PCIe-based GPUs with dual 2nd generation Intel Xeon Scalable processors. As a dual-root system, it suits AI training and inference, visual computing, simulation, and other high-performance applications.

This is not really a “new, new” system, as 3rd generation Intel Xeon Scalable processors are available and this one only supports 1st or 2nd generation processors. Plus, this specific model doesn’t support SXM2 form factor GPUs; that would be the G481-S80, and you can see that video here. SXM2 does offer significant gains in performance over PCIe-based GPUs. Of course, you will pay extra for that, especially for the GPUs themselves. In addition to the G481-S80, there are a few other G481 systems that differ slightly from one another, mostly in storage configuration and GPU support.

All that said, the G481-HA0 is a very worthy contender in the multi-GPU space. This is a dual-root system, meaning the GPUs are split between the CPUs, with each CPU in charge of 5 of the 10x GPUs housed in this system. It’s designed for large-scale deployments. If this were a single-root system like the G481-HA1, all of those GPUs would be routed through a single CPU first via the PCIe switches. That CPU would then communicate with the other CPU via the Ultra Path Interconnect (UPI). With this one, you need both processors installed or you only get half the goods.
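To make the dual-root versus single-root difference concrete, here is a minimal Python sketch. The GPU numbering (0 to 4 on the first CPU, 5 to 9 on the second) and the function names are assumptions for illustration only; the real slot-to-CPU mapping should be checked against Gigabyte's block diagram.

```python
NUM_GPUS = 10
GPUS_PER_ROOT = 5  # dual root: each CPU is in charge of 5 of the 10 GPUs


def root_cpu(gpu_index: int, single_root: bool = False) -> int:
    """Return the CPU (0 or 1) whose PCIe root a GPU hangs off.

    The index-to-CPU mapping is hypothetical, not taken from Gigabyte docs.
    """
    if not 0 <= gpu_index < NUM_GPUS:
        raise ValueError(f"gpu_index must be in 0..{NUM_GPUS - 1}")
    if single_root:
        return 0  # every GPU is routed through one CPU first
    return gpu_index // GPUS_PER_ROOT


def crosses_upi(gpu_a: int, gpu_b: int, single_root: bool = False) -> bool:
    """True when traffic between two GPUs has to hop between the CPUs."""
    return root_cpu(gpu_a, single_root) != root_cpu(gpu_b, single_root)


def visible_gpus(installed_cpus: set, single_root: bool = False) -> list:
    """GPUs that are reachable given which CPU sockets are populated."""
    return [
        gpu for gpu in range(NUM_GPUS)
        if root_cpu(gpu, single_root) in installed_cpus
    ]


print(visible_gpus({0}))     # dual root with one CPU: half the GPUs
print(visible_gpus({0, 1}))  # dual root with both CPUs: all ten
```

In this toy model, a job that keeps its GPUs under one CPU never touches UPI on the dual-root box, while a job spanning both halves does.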

There are several GPUs supported on this system, including the Nvidia Tesla P100 and P40 featuring the Pascal architecture, the V100 and V100S with the Volta architecture for computational acceleration, and the T4 with the Turing architecture, an asset for distributed environments. There’s also the Nvidia RTX 8000 with active cooling and the A6000 with passive cooling; those are for applications involving rapid visualization on workstations, or AI and deep learning applications. Rounding out the list is a lone AMD Radeon Instinct MI50 32GB, also used for computational acceleration. The weapon of choice in this case, or at least the one with the highest memory bandwidth, is the Nvidia Tesla V100S general-purpose GPU.

This is an interesting system. At 4U, there is quite a bit of storage up front, placing raw data close to the CPUs for quick access to cached and large data sets. A tiered storage layout offers 12x 3.5-inch SAS/SATA hot-swappable drive bays on the bottom, and another bank of 10x 2.5-inch hot-swappable drive bays on top. 8x of those are NVMe and have orange drive tray release tabs. The two adjacent drive bays, and those in the lower bank, have blue drive tray release tabs indicating SAS/SATA HDD or SSD support. On the top left, there is a PCIe slot for the pre-installed RAID card.

On the right, a control panel has health status LEDs (HDD Status and System Status); On/Off, ID, Reset, and non-maskable interrupt (NMI) buttons; plus a few ports, including dual 1Gb Ethernet and a 1Gb management LAN port with more LEDs indicating link activity and speed. Then there are two USB 3.0 ports and a VGA port for a crash cart. You can also install an optional plug-and-play LAN module on the back of the system. That one can support dual 10Gb Ethernet LAN ports and a server management port.

All of that GPU-enriched high-performance computing can be managed with Gigabyte Server Management, or GSM, Gigabyte’s proprietary management suite. This software suite is free of charge, delivering a faster ROI when compared to some other companies that charge a licensing fee to manage the system you just bought. It uses the AMI MegaRAC SP-X baseboard management controller solution and an Aspeed AST2500 module, which is compatible with both the Redfish API and IPMI 2.0. It’s used for both remote and at-chassis management of the system. GSM has several sub-programs to help monitor system health, plus a mobile application, GSM Mobile. It offers an easy-to-use browser-based user interface and supports a variety of features. The mobile app is compatible with both Android and iOS operating systems.
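Since the BMC speaks Redfish, health data can also be pulled with a few lines of script instead of the GSM interface. The sketch below assumes the BMC exposes the standard DMTF `Thermal` resource under `/redfish/v1/Chassis/<id>/Thermal`; the host name, chassis ID, credentials, sensor names, and the 10 °C margin are all placeholders, and the sample payload is made up for illustration rather than captured from a G481-HA0.

```python
import base64
import json
import urllib.request


def thermal_url(bmc_host: str, chassis_id: str) -> str:
    """Build the URL of the Redfish Thermal resource for one chassis."""
    return f"https://{bmc_host}/redfish/v1/Chassis/{chassis_id}/Thermal"


def fetch_thermal(bmc_host: str, chassis_id: str, user: str, password: str) -> dict:
    """Fetch the Thermal resource using HTTP basic auth.

    Assumes the BMC presents a certificate the client trusts; many BMCs ship
    with self-signed certificates and need extra TLS setup.
    """
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        thermal_url(bmc_host, chassis_id),
        headers={"Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def hot_sensors(thermal: dict, margin_c: float = 10.0) -> list[str]:
    """Names of sensors reading within margin_c of their critical threshold."""
    hot = []
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        limit = sensor.get("UpperThresholdCritical")
        if reading is None or limit is None:
            continue  # sensor absent or no threshold reported
        if reading >= limit - margin_c:
            hot.append(sensor.get("Name", "unknown"))
    return hot


# Hypothetical payload in the shape of a Redfish Thermal resource.
SAMPLE = {
    "Temperatures": [
        {"Name": "GPU0", "ReadingCelsius": 62, "UpperThresholdCritical": 90},
        {"Name": "CPU0", "ReadingCelsius": 88, "UpperThresholdCritical": 95},
        {"Name": "Inlet", "ReadingCelsius": None, "UpperThresholdCritical": 45},
    ]
}
print(hot_sensors(SAMPLE))
```

Keeping the parsing separate from the network call means the threshold logic can be tried against saved JSON before pointing it at a live BMC.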

Another free-of-charge program, this one offered by Nvidia, is Nvidia GPU Cloud, or NGC. NGC provides a container registry and software that enables the creation of GPU-accelerated containers for scale-up and scale-out multi-GPU and multi-node systems. Of course, Nvidia designed this for use with Nvidia products, but we think you can still use it with the other GPUs supported on this system. It offers support for Docker and Singularity plus VMware vSphere, and new containers are published monthly. NGC allows quick and easy deployment, significantly reducing setup time.

As you would guess, this system draws a lot of power to support up to 10 GPUs. Providing the juice are three 80 PLUS Platinum 2200W PSUs. I’m sure you noticed the PCIe slots above them, which we will take a closer look at inside. That GPU fan module is for the two top-mounted GPUs. You will need to remove those fans and the GPU PCIe bracket inside to install GPUs.
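For a rough feel of how far three 2200W supplies go, here is a back-of-the-envelope power budget. Only the PSU count and rating come from this review. The 250W per GPU and 205W per CPU figures are the published TDPs for a V100S PCIe card and a top-bin 2nd generation Xeon Scalable part, the 600W for drives, fans, memory, and everything else is a placeholder guess, and the 2+1 redundancy mode is an assumption that should be confirmed against Gigabyte's documentation.

```python
PSU_WATTS = 2200  # from the review: three 80 PLUS Platinum 2200W PSUs
PSU_COUNT = 3


def usable_watts(redundant_psus: int = 1) -> int:
    """Power available with some PSUs held in reserve (2+1 is assumed)."""
    return PSU_WATTS * (PSU_COUNT - redundant_psus)


def estimated_draw(
    gpus: int = 10,
    gpu_watts: int = 250,    # V100S PCIe TDP
    cpus: int = 2,
    cpu_watts: int = 205,    # top-bin 2nd gen Xeon Scalable TDP
    other_watts: int = 600,  # placeholder for drives, fans, memory, NICs
) -> int:
    """Sum of component power figures; a planning estimate, not a measurement."""
    return gpus * gpu_watts + cpus * cpu_watts + other_watts


def headroom(redundant_psus: int = 1, **draw_kwargs) -> int:
    """Watts left over after the estimated draw."""
    return usable_watts(redundant_psus) - estimated_draw(**draw_kwargs)


print("estimated draw:", estimated_draw(), "W")
print("usable with 2+1:", usable_watts(), "W")
print("headroom:", headroom(), "W")
```

Swapping in lower-power cards such as the T4 only means changing `gpu_watts`, which makes it easy to compare configurations.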

Many of the drive cables from the backplane plug directly into the system board. As previously mentioned, a PCIe slot is perched right above the drive bays for a pre-installed HD/RAID controller and expander board. It provides support for SAS at 12Gb/s or SATA at 6Gb/s.

A slot on the system board supports an Intel Virtual RAID on CPU (VROC) key for use with NVMe solid state drives. The cables for the NVMe drives plug directly into the motherboard. The VROC key is included with the system but only works with Intel NVMe SSDs. The motherboard has two CPU sockets, each in charge of 12x memory module slots, with 6x to either side.

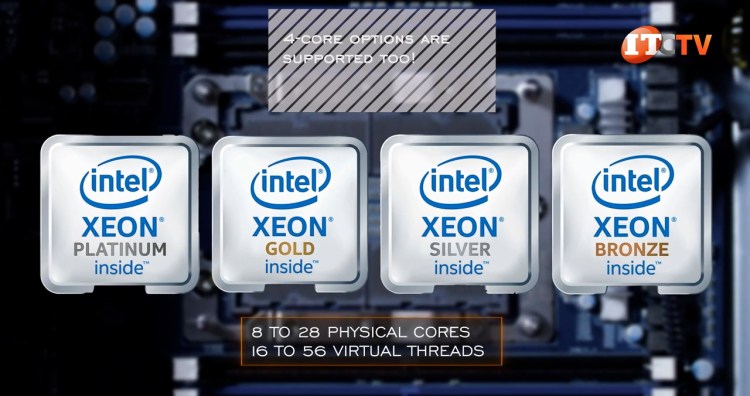

This platform can use Platinum, Gold, Silver, or Bronze CPUs, with 8 to 28 physical cores and 16 to 56 virtual threads. Each of the processors also supports 6x memory channels and 12x memory module slots with variable memory speeds depending on the CPU, memory module and configuration.

In addition to load-reduced or registered memory modules, this system can also be outfitted with up to 3TB of Intel Optane DC persistent memory modules (DCPMMs). Persistent memory modules offer resiliency and speed in 12x of the 24x slots but are only compatible with 2nd generation Intel Xeon Scalable processors. The remaining 12x slots would be outfitted with registered memory modules, one in the slot adjacent to each installed persistent memory module. DCPMMs also support a caching mode, in which the regular DRAM acts as a cache in front of them. They are also persistent, like flash memory, resulting in better data protection in the event of a power or system failure. You also get support for more memory in general with 2nd generation CPUs.
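The slot math is easy to check. This sketch assumes 256GB persistent memory modules, which is what 3TB across 12x slots works out to, and pairs each one with a registered DIMM as described above. The 32GB RDIMM size is just a placeholder, and the function name is made up for illustration.

```python
SLOTS_TOTAL = 24       # 12x per CPU, two CPUs
MAX_PMEM_MODULES = 12  # persistent memory goes in 12x of the 24x slots


def memory_totals(pmem_modules: int, pmem_gb: int, dram_gb: int) -> dict:
    """Capacity summary for a mixed persistent memory + RDIMM build.

    Each persistent memory module is paired with one RDIMM in the
    adjacent slot, so the DRAM module count mirrors the PMem count.
    """
    if not 0 < pmem_modules <= MAX_PMEM_MODULES:
        raise ValueError(f"pmem_modules must be 1..{MAX_PMEM_MODULES}")
    dram_modules = pmem_modules
    return {
        "pmem_gb": pmem_modules * pmem_gb,
        "dram_gb": dram_modules * dram_gb,
        "slots_used": pmem_modules + dram_modules,
        "slots_free": SLOTS_TOTAL - (pmem_modules + dram_modules),
    }


full = memory_totals(pmem_modules=12, pmem_gb=256, dram_gb=32)
print(full)
print("persistent memory in TB:", full["pmem_gb"] / 1024)
```

With all 12x persistent memory slots filled, every one of the 24x slots is in use, so any further DRAM has to come from larger RDIMMs rather than more of them.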

For the top memory speed of 2933MT/s, you will also need those 2nd Gen processors. What you can only get with the first-gen Skylake processors is support for the “F” series processors with integrated Omni-Path fabric, which were discontinued with the release of the Gen 2 CPUs. With that option, you could install 4 QSFP28 LAN ports for a total of 100Gb/s bandwidth, bypassing the PCIe lanes with a direct cable from the I/O controller to the CPU.

Just past the system board is a row of 6x dual fan modules, which bridge the gap between the motherboard and the PCIe board. Sitting between the CPUs and the GPUs are the PCIe switches that make all this PCIe bandwidth for 10x full-height, full-width Nvidia Tesla V100S GPUs possible. Each of the GPUs fits in a PCIe Gen 3.0 x16 slot with a x16 PCIe link. Two of those GPUs are mounted in the PCIe bracket on top of the other 8x, and that’s where that external fan bracket comes into play, ensuring air is pulled over those two GPUs and maintaining operational temperatures at peak performance.

On a side note, the Nvidia Tesla V100S GPU comes with 32GB natively and features Tensor cores with 1134GB/s memory bandwidth. It’s billed as the most advanced data center GPU ever built to accelerate AI, high-performance computing, data science, and graphics, a title previously held by the V100. That said, perhaps the definition has not been updated since Nvidia released a few other GPUs, namely its successor, the Ampere-based A100, which offers more than twice the performance of the Volta-based V100S. Technology…what can you say? Of course, GPU servers outfitted with SXM2 or SXM3 form factor GPUs use Nvidia’s NVLink technology, enabling the GPUs to function as one and operate at speeds of up to 300GB per second, compared to only 32GB per second through the PCIe bus with a PCIe-based GPU server.
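Where does that 32GB per second PCIe figure come from? A quick calculation from the PCIe 3.0 signalling rate (8 GT/s per lane with 128b/130b encoding) shows it is the rounded total for a x16 link counting both directions. The sketch below uses the 300GB/s NVLink number quoted above, which is likewise an aggregate figure; the helper names are made up for illustration.

```python
PCIE3_GT_PER_S = 8.0           # PCIe 3.0 signalling rate per lane
PCIE3_ENCODING = 128 / 130     # 128b/130b line encoding overhead
NVLINK_TOTAL_GB_PER_S = 300.0  # aggregate figure quoted in the review


def pcie3_gb_per_s(lanes: int, bidirectional: bool = False) -> float:
    """Theoretical PCIe 3.0 bandwidth in GB/s for a link of `lanes` width."""
    per_lane = PCIE3_GT_PER_S * PCIE3_ENCODING / 8  # bits to bytes
    total = per_lane * lanes
    return total * 2 if bidirectional else total


def transfer_seconds(size_gb: float, link_gb_per_s: float) -> float:
    """Best-case time to move size_gb over a link, ignoring protocol overhead."""
    return size_gb / link_gb_per_s


x16_one_way = pcie3_gb_per_s(16)
x16_both = pcie3_gb_per_s(16, bidirectional=True)
print(f"PCIe 3.0 x16, one direction:   {x16_one_way:.2f} GB/s")
print(f"PCIe 3.0 x16, both directions: {x16_both:.2f} GB/s")
print(f"NVLink advantage: {NVLINK_TOTAL_GB_PER_S / x16_both:.1f}x")
print(f"Filling 32GB of GPU memory over PCIe: "
      f"{transfer_seconds(32, x16_one_way):.2f} s")
```

These are theoretical ceilings; real transfers land lower once packet overhead and the PCIe switches are in the path.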

Those two PCIe switches enable the PCIe bus to connect 10x GPUs, each with a x16 PCIe link, for a total of 160 lanes! The switches facilitate orderly processing and caching, enabling the seamless, and fast, processing of all of that data using only the 96 actual PCIe 3.0 lanes. That would be the combined PCIe lane total available from the dual Xeon Scalable CPUs at 48 PCIe 3.0 lanes apiece. And we haven’t even thrown the NVMe drives into the mix yet!

Each of those NVMe drives requires a x4 PCIe 3.0 link. With 8x of those, that’s an additional 32 PCIe lanes to add to the 160 used by the GPUs. Then, there is a single x16 slot at the back of the chassis that can be used for a high-performance I/O controller like a high-speed network card offering up to 100Gb/s transfer speeds.

And, no, we did not forget the RAID controller in front with its x8 PCIe slot. It may seem like your pistons are all firing at once, but they’re not. They each take some microsecond breaks in between. And in this case, that is where the caching and the fast storage up front, close to the CPUs, come in.
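Tallying up the lane counts from the last few paragraphs gives a feel for how oversubscribed the CPUs are. This is a simple sum that treats every device as drawing on the same pool of 96 CPU lanes; it does not model which devices actually sit behind the PCIe switches, and the dictionary layout and function names are just for illustration.

```python
CPU_LANES = 2 * 48  # two Xeon Scalable CPUs at 48 PCIe 3.0 lanes apiece

# device group -> (count, lanes per device), as quoted in the review
DEVICES = {
    "GPUs": (10, 16),
    "NVMe drives": (8, 4),
    "rear I/O slot": (1, 16),
    "front RAID slot": (1, 8),
}


def lanes_requested(devices: dict) -> int:
    """Total lanes the devices would use if each had a dedicated link."""
    return sum(count * width for count, width in devices.values())


def oversubscription(devices: dict, cpu_lanes: int = CPU_LANES) -> float:
    """Ratio of device-side lanes to lanes the CPUs actually provide."""
    return lanes_requested(devices) / cpu_lanes


for name, (count, width) in DEVICES.items():
    print(f"{name:16s} {count:2d} x{width:<2d} = {count * width:3d} lanes")
print("requested:", lanes_requested(DEVICES))
print("available:", CPU_LANES)
print("oversubscription:", oversubscription(DEVICES))
```

That gap between requested and available lanes is exactly what the PCIe switches are there to absorb, on the bet that not every device saturates its link at the same moment.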

Bottom line, the Gigabyte G481-HA0 GPU server is still very relevant for the processing needs of a modern data center or enterprise business. It offers a balanced dual-root system with 10x high-performance GPUs for superior data processing in large deployments.

Keep in mind, if you are looking for one of these, IT Creations has them. Oh, and if you have any questions on this or any other server, post them in the comments section below, and maybe check out our other review of the G481-S80 GPU Server featuring SXM2 form factor GPUs and NVLink.