Gigabyte G481-S80 GPU Server Review

July 9, 2020

The Gigabyte G481-S80 is our second Gigabyte server review. It is also a GPU server, but from the Intel side of the aisle. We already reviewed the Gigabyte G291-Z20 GPU server a few weeks back, which offers a single AMD processor and up to 8x double-wide, full-length GPUs. This one sports dual Intel Xeon Scalable processors and up to 8x SXM2 form factor GPUs with NVLink.

Introduction

The Gigabyte G481-S80 server is a 4U system with 8 GPUs. The previous Gigabyte G291-Z20 server also featured 8x GPUs, but was 2U and used the standard PCIe interface. Size aside, this system supports up to 8x NVIDIA Tesla P100 or V100 SXM2 modules, which use an NVIDIA NVLink board to harness the full potential of GPU-to-GPU communications. The theoretical communications speed is somewhere between 300GB/s and 500GB/s, compared to only 32GB/s for standard full-height, double-width NVIDIA GPUs with a standard GPU-to-CPU connection. Anyways, those SXM2 modules are smaller and thinner than the standard form factor. So, why is this system so big? Hopefully we will answer this pressing question, but let’s start with the front of the system, as usual.

Front of the Chassis

On the front of the chassis, there is a bank of 10x 2.5-inch storage bays, with 6x allocated for SAS or SATA storage and the other four bays on the far right supporting up to 4x NVMe drives. Above that are ports for a crash cart, including a VGA port, 2x USB 3.0 ports, an MLAN port, and two 1Gb Ethernet ports.

Adjacent to those ports are some tell-tale lights and buttons, including the power button, ID button, reset button, HDD status LED, a Non-Maskable Interrupt (NMI) button for when multiple-bit ECC errors occur, and a system status LED. A front-accessible PCIe 3.0 x8 slot on the far left for an MD2 card can provide additional NVMe or SAS/SATA storage, or can be used to support a RAID controller.

Back of the Chassis

On the back of the Gigabyte G481-S80 you’ll notice 4x PSUs lined up along the bottom of the chassis for redundancy. These are the big ones: 2200W, 80 PLUS Platinum units. Right next to the last PSU is a small hot-swap pull-out LAN module that features an integrated gigabit network port and a slot for an optional OCP card for other network connection speeds.

Four low-profile slots above the PSUs are for additional networking. If you want to get the most out of this system, you’re probably going full 100GbE with InfiniBand by Mellanox. (NOTE: NVIDIA just purchased Mellanox so we can safely assume this partnership will only lead to greater levels of performance.)

Five pairs of cutouts on the back panel are for adding a liquid cooling solution, which this system is designed for. Gigabyte has already partnered with Allied Control and 3M on a two-phase immersion cooling solution that will not only protect your hardware from overheating, but also significantly reduce energy consumption in the data center.

Inside the Chassis

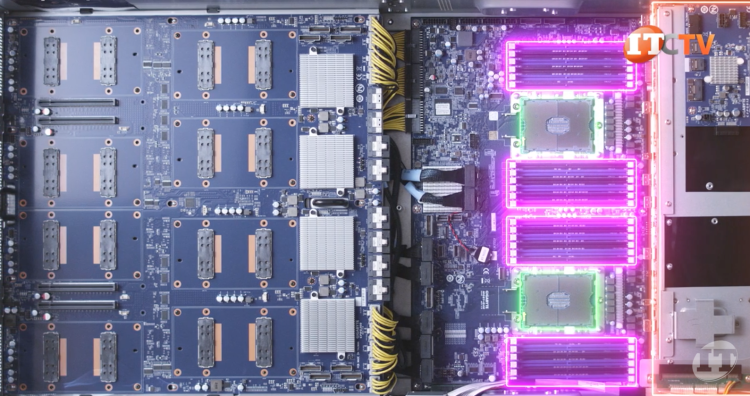

Once you remove the server’s cover panel, the interior is very neatly arranged. It’s divided into two distinct areas separated by the bank of 6x dual high-performance 120mm fan modules. Individual fan speed is adjusted automatically for the best cooling and power economy, but you can also manually create cooling profiles depending on your needs.

You’ve got the CPU and memory modules on one side along with the front storage section. On the other side, you have the NVLink board with 4x PEX PCIe splitters (PCIe switches), which route the data traffic to and from the CPUs and also allow for more PCIe lanes. Without those PCIe switches, each processor can only support a total of 48 PCIe 3.0 lanes, or 96 total with both processors installed.

The Gigabyte G481-S80 can support eight SXM2 modules, each on a x16 link, plus four more x16 slots for InfiniBand network controllers. Then there are the two x8 slots: one at the front for an MD2 card, which is a SAS/SATA RAID controller, and another supporting the OCP LAN card in that hot-plug module in back. So, in total we have up to 208 PCIe lanes thanks to those PCIe splitters. If you add the NVMe drive connectors (2 x8 connectors for 16 more PCIe lanes, or 4 lanes per NVMe drive), it bumps that total to 224 PCIe lanes!
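The lane totals quoted above are easy to check with a bit of arithmetic. Here is a minimal sketch using only the slot counts and widths given in this review; the dictionary keys and variable names are ours, made up for illustration.

```python
# Slot counts and lane widths as described in this review.
# The group names are illustrative labels, not Gigabyte terminology.
slot_groups = {
    "sxm2_gpu_links": (8, 16),       # eight SXM2 modules at x16 each
    "network_slots": (4, 16),        # four x16 slots for InfiniBand cards
    "front_and_ocp_slots": (2, 8),   # front MD2 slot plus rear OCP slot
}
NVME_DRIVES = 4
LANES_PER_NVME_DRIVE = 4
LANES_PER_CPU = 48                   # PCIe 3.0 lanes per Xeon Scalable CPU
CPU_COUNT = 2

def total_lanes(groups):
    """Sum count * width over every slot group."""
    return sum(count * width for count, width in groups.values())

slot_lanes = total_lanes(slot_groups)
nvme_lanes = NVME_DRIVES * LANES_PER_NVME_DRIVE
all_lanes = slot_lanes + nvme_lanes
native_lanes = LANES_PER_CPU * CPU_COUNT

print(slot_lanes)    # 208
print(all_lanes)     # 224
print(native_lanes)  # 96
```

The gap between 224 lanes of devices and 96 native CPU lanes is what the PCIe switches are there to bridge.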

There are two pairs of x16 PCIe 3.0 slots to support up to 4x Mellanox 100GbE connections. Those network cards are connected with one of the CPUs and two of the SXM2 form factor GPUs through the NVLink board. This design facilitates unhindered peer-to-peer communications and RDMA (Remote Direct Memory Access). And that answers the size question: this system ends up at 4U once all the heatsinks are set up on the NVLink board!

NVLink vs PCIe

Now, what are the advantages of NVLink over PCIe? Lower latency and higher throughput. Compared to a PCIe-based GPU installation at up to 32GB/s, this one delivers 300GB/s, or almost a ten-fold increase in data flow. But it’s not just the NVLink board. Those SXM2 GPUs, in this case Tesla V100 GPUs, also have 6x bi-directional NVLinks featuring 25GB/s per link in each direction, so communications between GPUs are super-duper fast. They’re significantly faster than what you can achieve with PCIe-based GPUs, which use the PCIe bus to communicate with the other GPUs through the CPUs, adding more latency to the mix. Peer-to-peer communications and remote direct memory access are enhanced by the NVLink architecture, which includes high-speed network controllers attached directly to the GPUs and CPU. It’s SUPER fast!
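For anyone wondering where the 300GB/s figure comes from, it falls straight out of the per-link numbers. A quick sketch, using only the figures quoted in this review (the function and constant names are ours):

```python
# Figures as quoted in this review for the Tesla V100 SXM2.
NVLINKS_PER_GPU = 6
GB_S_PER_LINK_PER_DIRECTION = 25
PCIE_GB_S = 32   # the PCIe figure quoted above for comparison

def nvlink_aggregate_gb_s(links, per_direction_gb_s):
    """Aggregate bandwidth: every link carries traffic in both directions."""
    return links * per_direction_gb_s * 2

aggregate = nvlink_aggregate_gb_s(NVLINKS_PER_GPU, GB_S_PER_LINK_PER_DIRECTION)
speedup = aggregate / PCIE_GB_S

print(aggregate)  # 300
print(speedup)    # 9.375
```

So "almost ten-fold" is a fair description: the ratio works out to a little over nine.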

CPU and Memory

There are two sockets for 2nd generation Intel Xeon Scalable processors of up to 205W and 28 cores. Each processor supports 12x memory module slots, for a total of 24 active memory module slots with two processors installed. A memory status LED is on the system board, presumably for easy access. Also compatible are the Intel processors with integrated Omni-Path fabric connectors for reduced latency. With 2nd generation CPUs you have options for more memory compared to their 1st generation counterparts. That includes Data Center Persistent Memory Modules (DCPMM), plus a few new processors with options for more memory, lower power usage and other features.

The Gigabyte G481-S80 server will support up to 64GB Registered memory modules (RDIMMs) or 128GB Load Reduced memory modules (LRDIMMs) at speeds of up to 2666MHz. In fact, this system will support up to 12x 512GB DCPMM modules, but only with a complement of RDIMMs. The configuration includes a single RDIMM and one DCPMM in each channel, with the RDIMM installed first. Mind you, the overview of this system said 512GB DCPMM supported, but the specs go back to 12x 256GB. I’m going with 512GB modules supported, so that means 6TB of DC persistent memory modules (12 x 512GB) and an additional 768GB when you add in 12x 64GB RDIMMs, for a total of almost 7TB!
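Since the overview and the spec sheet disagree on the largest supported DCPMM, here is a small sketch that runs the capacity math both ways. The module counts and sizes are the ones quoted in this review; the function name is ours, and the split of 12 DCPMMs plus 12 RDIMMs assumes the one-of-each-per-channel layout described above.

```python
# One RDIMM plus one DCPMM per channel, 12 of each across two CPUs.
MODULES_PER_TYPE = 12
RDIMM_GB = 64

def capacity_gb(dcpmm_gb, rdimm_gb=RDIMM_GB, modules=MODULES_PER_TYPE):
    """Return (persistent, dram, total) capacity in GB."""
    persistent = modules * dcpmm_gb
    dram = modules * rdimm_gb
    return persistent, dram, persistent + dram

# Overview figure (512GB modules) versus spec-sheet figure (256GB modules).
with_512 = capacity_gb(512)
with_256 = capacity_gb(256)

print(with_512)            # (6144, 768, 6912)
print(with_512[2] / 1024)  # 6.75 TB
print(with_256)            # (3072, 768, 3840)
print(with_256[2] / 1024)  # 3.75 TB
```

Either way it is a lot of memory, but the difference between the two readings is a full 3TB, so it is worth confirming before you order.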

The DC persistent memory modules can be allocated as either memory in Memory Mode or as storage in Application Direct Mode. Pretty sweet feature for both data resiliency and storage! The backplane connects directly to the system board to support both the SAS/SATA drives and the NVMe drives, with ports on the motherboard. You will need a discrete RAID controller, though, for SAS implementations or for more control over your storage.

For NVMe SSD support, the system comes with a Virtual RAID on CPU (VROC) key, which provides RAID options for the NVMe drives through the CPU’s integrated Volume Management Device. The key that comes with the system is limited to Intel SSDs.

Management

At-chassis and remote management of the Gigabyte G481-S80 is through the Aspeed AST2500 controller module and dedicated management ports on the front and back of the system. The Gigabyte Server Manager (GSM) is Gigabyte’s proprietary management software, kind of like Dell’s integrated Dell Remote Access Controller with Lifecycle Controller or Integrated Lights Out on the HPE platforms. It’s compatible with both the Intelligent Platform Management Interface (IPMI) and Redfish (RESTful API).

So, what do you get with all that? You get an intuitive browser-based user interface with remote management and monitoring of multiple Gigabyte servers through the baseboard management controller in each chassis. The GSM Agent is installed on each Gigabyte chassis and provides more granular data on each chassis, like temperature, power consumption, fan speed, RAID information, and CPU usage rate, that can then be used by other GSM applications to allocate and manage resources. For management and integration with VMware vCenter, GSM Plugin is available. There is also a mobile application for both Android and iOS called, you guessed it, GSM Mobile! The best part about Gigabyte’s proprietary management suite is there is no additional cost for remote access to the system like you might get with other manufacturers.
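To give a feel for what Redfish compatibility means in practice, here is a rough sketch of pulling temperature and fan readings out of a Redfish Thermal resource. The property names (`Temperatures`, `ReadingCelsius`, `Fans`, `Reading`) follow the standard DMTF Thermal schema, but the sample payload is entirely made up for illustration, and we are assuming, not confirming, that this server's BMC firmware exposes the resource in this shape. No network call is made here.

```python
# Hypothetical payload shaped like a DMTF Redfish Thermal resource, as
# typically served under /redfish/v1/Chassis/<id>/Thermal.
# Sensor names and values are invented for this example.
SAMPLE_THERMAL = {
    "Temperatures": [
        {"Name": "CPU0 Temp", "ReadingCelsius": 48},
        {"Name": "GPU0 Temp", "ReadingCelsius": 61},
        {"Name": "Absent Sensor", "ReadingCelsius": None},
    ],
    "Fans": [
        {"Name": "Fan 1", "Reading": 7200, "ReadingUnits": "RPM"},
        {"Name": "Fan 2", "Reading": 7150, "ReadingUnits": "RPM"},
    ],
}

def summarize_thermal(thermal):
    """Return ({sensor: celsius}, {fan: reading}), skipping empty readings."""
    temps = {
        t["Name"]: t["ReadingCelsius"]
        for t in thermal.get("Temperatures", [])
        if t.get("ReadingCelsius") is not None
    }
    fans = {
        f["Name"]: f["Reading"]
        for f in thermal.get("Fans", [])
        if f.get("Reading") is not None
    }
    return temps, fans

temps, fans = summarize_thermal(SAMPLE_THERMAL)
hottest = max(temps, key=temps.get)
print(temps)
print(fans)
print(hottest)
```

In a real deployment you would fetch the JSON from the BMC over HTTPS with proper credentials and then feed it to a function like this one.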

GPUs

As we said earlier, these SXM2 GPUs are top notch when it comes to performance. This system will accept either the NVIDIA Tesla P100, featuring the Pascal architecture, or the Tesla V100, featuring the Volta architecture, both in the SXM2 form factor. Our system will be outfitted with the NVIDIA Tesla V100 GPUs. The V100 kicks ass over the P100 in basically all categories. By NVIDIA’s numbers, it is up to 3X faster than the Tesla P100 for deep learning training.

The V100 is also available in 16GB and 32GB versions. Both the P100 and V100 are designed for deep learning and machine learning applications, and feature error correcting code (ECC) memory. So aside from being super fast, the Tesla P100 and V100 have ECC memory, which is not something you would get on an implementation using the RTX and Titan versions. Also, the RTX cards don’t get the Volta architecture, so RTX also loses out on the coolness naming factor. I can’t say the same for Titan. With no ECC, the system is unable to alert the user to single and double-bit errors, which have the ability to drastically skew results for applications where you would use a high-precision monstrosity.

Summary

The Gigabyte G481-S80 GPU server is not just fast, but stupid-crazy fast, and for certain applications it can replace multiple CPU servers running the same applications. Expensive, yes, but the advantages in time saved can far outweigh the initial expenditure.
